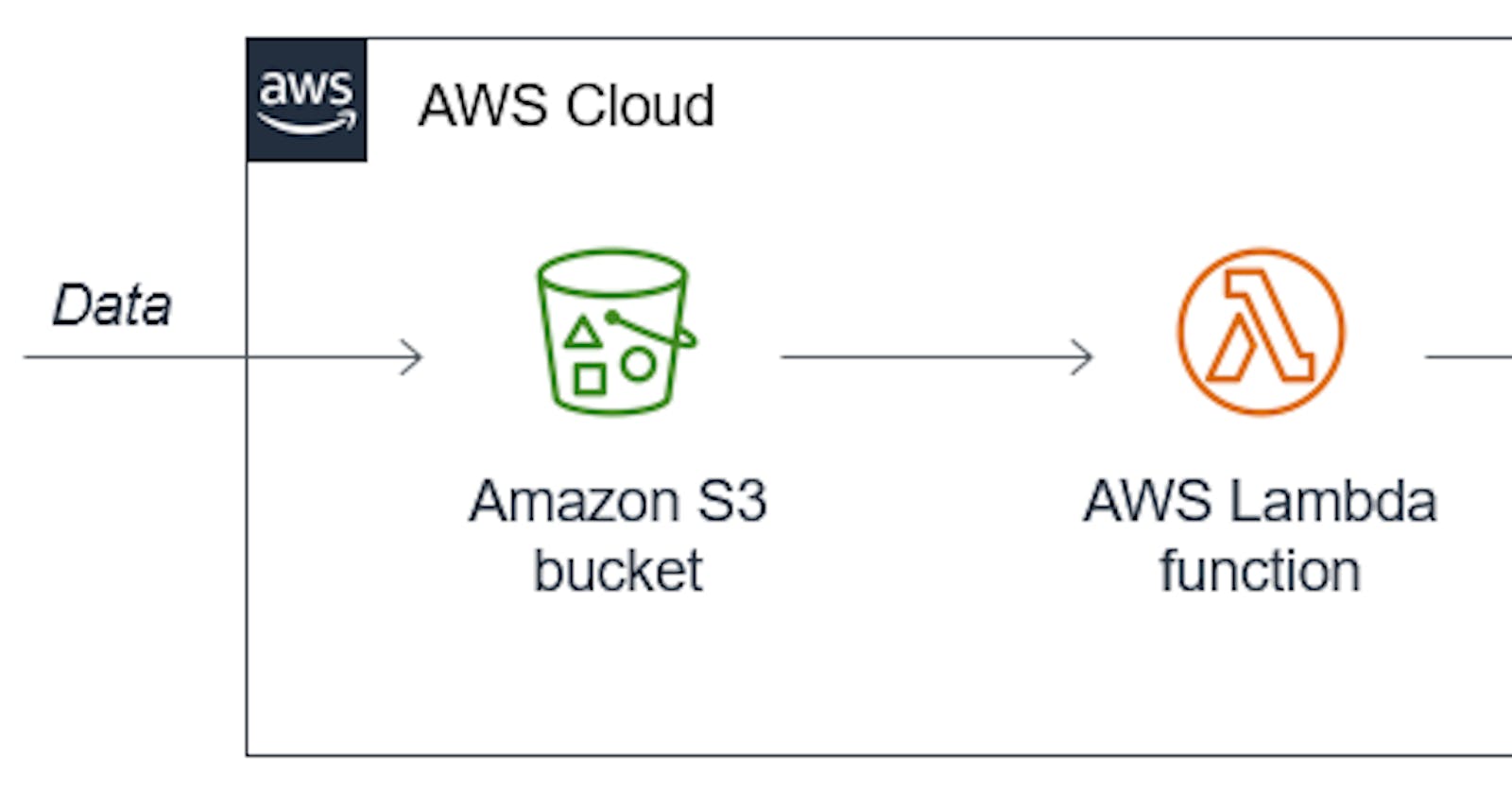

In this project we'll learn how to trigger a Lambda function from an S3 bucket and copy information about the uploaded data into DynamoDB.

In this project, we are going to complete the following steps:

Create an Amazon S3 bucket.

Create a Lambda function.

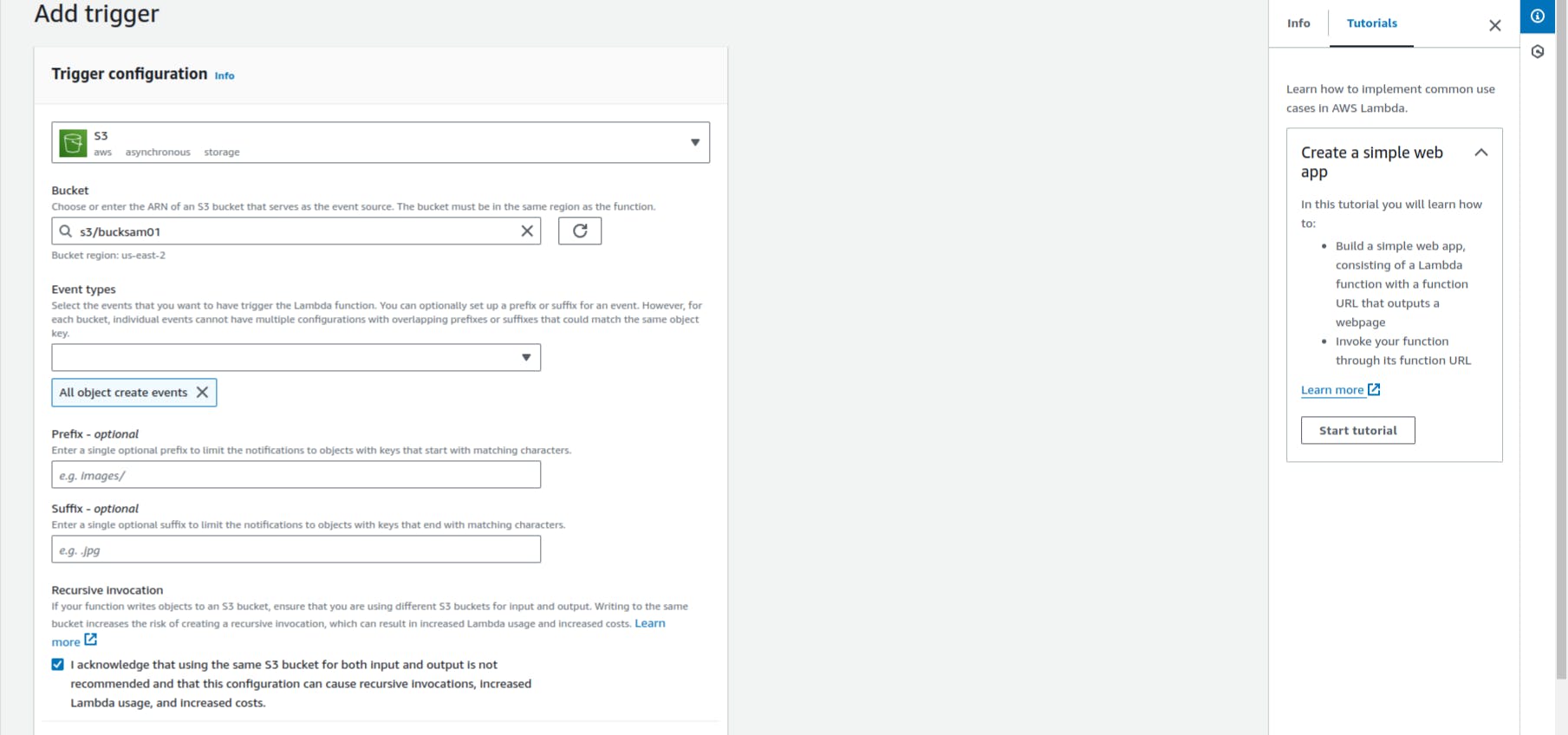

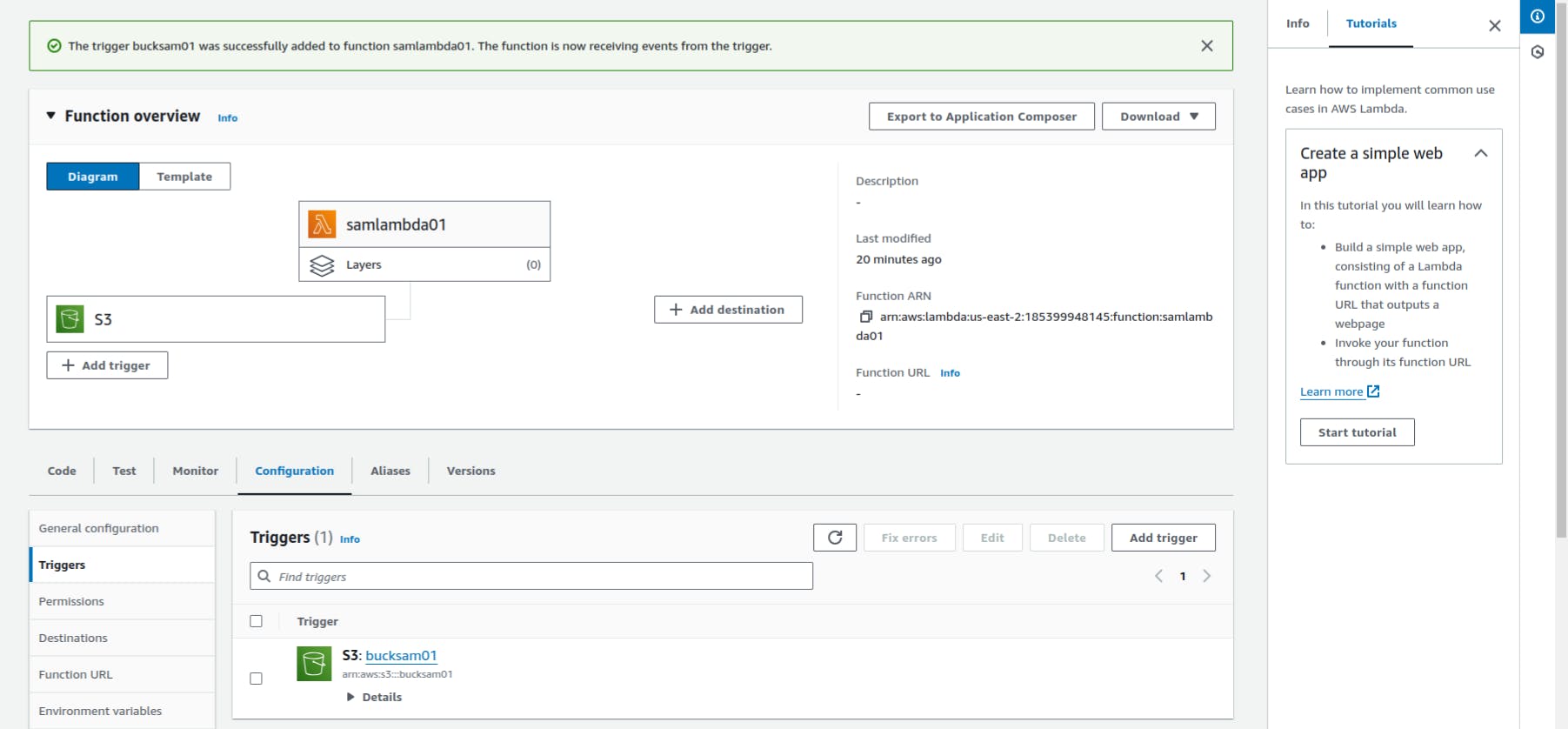

Configure a Lambda trigger that invokes our function when objects are uploaded to our bucket.

Copy the data information into DynamoDB.

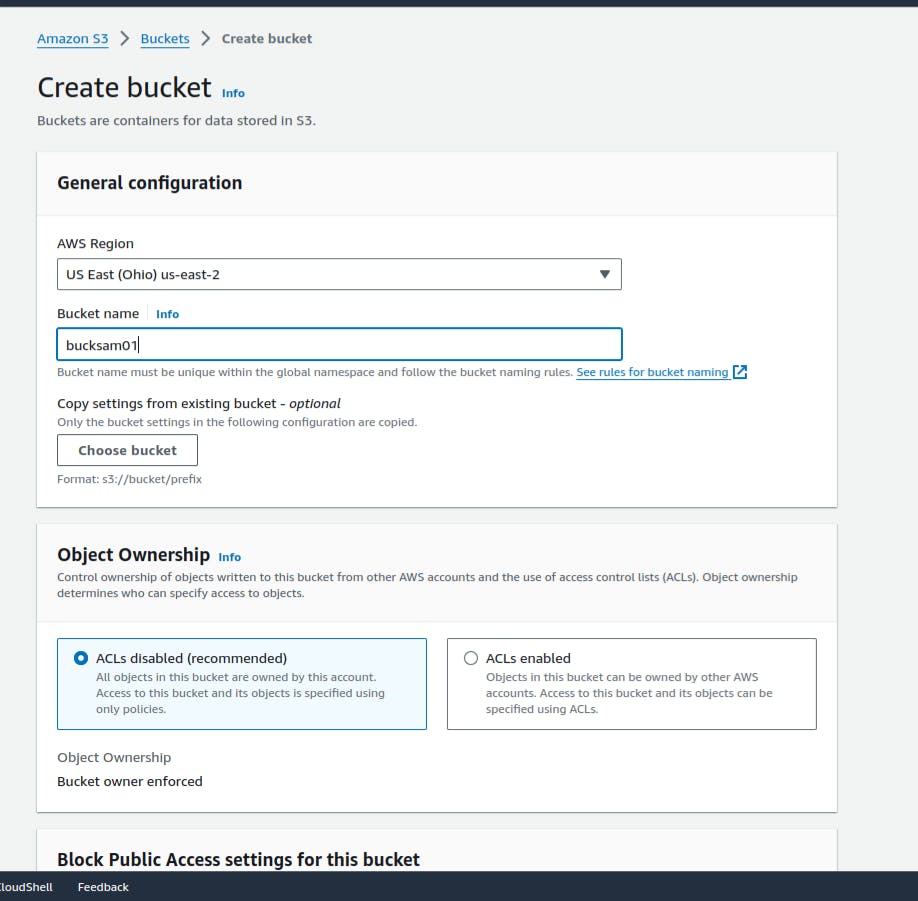

Step1:- Create an Amazon S3 bucket

We will create an S3 bucket and make the content inside it publicly accessible.

Log in to the AWS console and search for S3 in the search bar.

Click on Create Bucket

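Now enter the bucket name. It must be unique, and bucket names can contain only lowercase letters, numbers, dots (.), and hyphens (-). Keep the remaining options at their defaults, then click on the "Create bucket" button at the bottom. Note that new buckets block all public access by default, so the public bucket policy added later in this step is only accepted once "Block all public access" is turned off.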
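The naming rules can be checked before you type a name into the console. The sketch below is only a rough local check: the function name `is_valid_bucket_name` is made up for this example, and the length limit (3 to 63 characters), the start/end rule, and the IP-address rule come from the general S3 naming rules rather than from this walkthrough. It cannot tell you whether the name is already taken.

```python
import re

# Lowercase letters, digits, dots and hyphens; must start and end with a
# letter or digit; 3 to 63 characters in total.
_BUCKET_NAME_RE = re.compile(r"[a-z0-9][a-z0-9.-]{1,61}[a-z0-9]")
_IP_ADDRESS_RE = re.compile(r"\d{1,3}(\.\d{1,3}){3}")


def is_valid_bucket_name(name: str) -> bool:
    """Return True if `name` passes the basic S3 naming checks."""
    if not _BUCKET_NAME_RE.fullmatch(name):
        return False
    # Two adjacent dots are not allowed.
    if ".." in name:
        return False
    # Names formatted like an IP address are not allowed.
    if _IP_ADDRESS_RE.fullmatch(name):
        return False
    return True
```

For example, `is_valid_bucket_name("My_Bucket")` is rejected because of the uppercase letters and the underscore.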

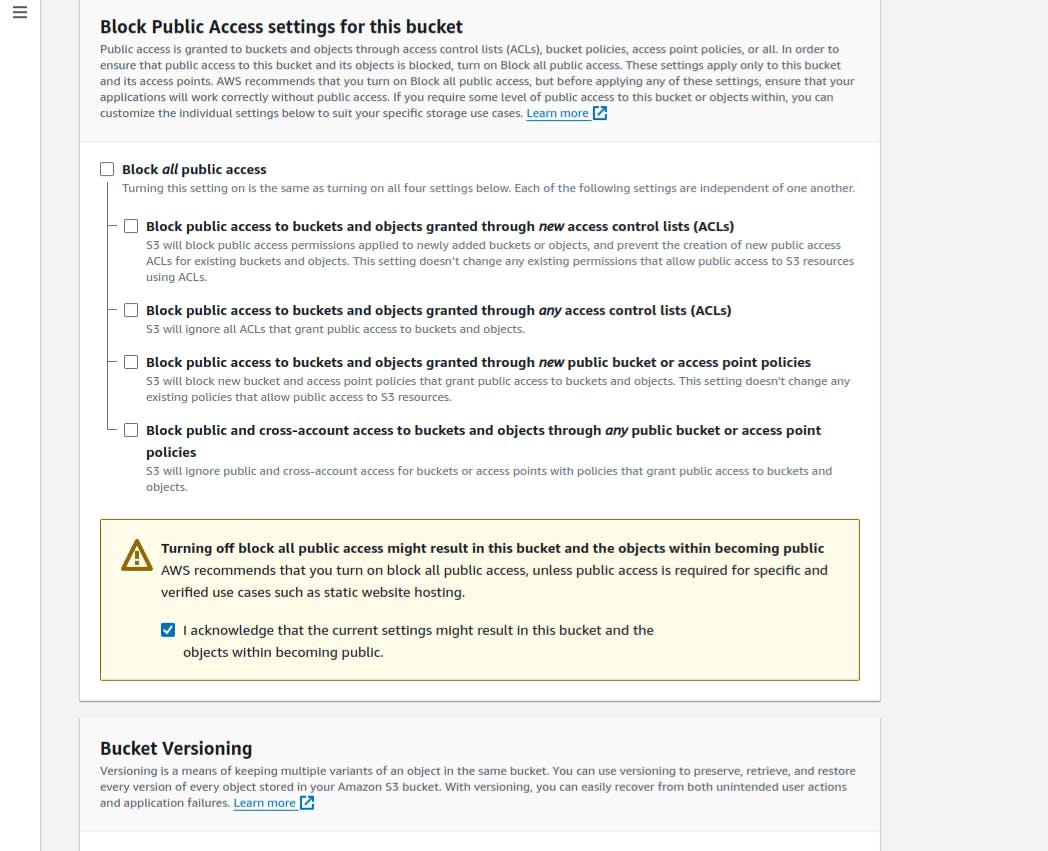

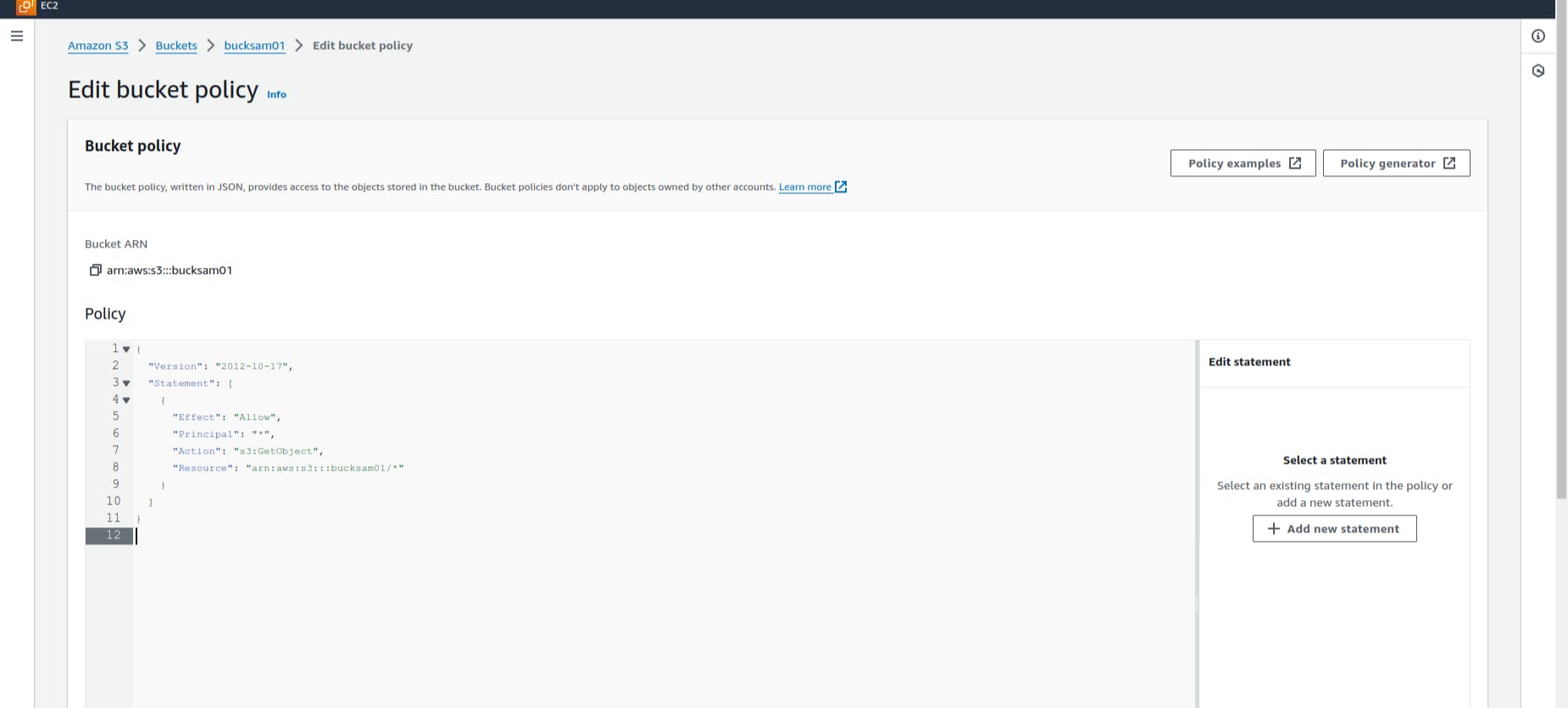

After your bucket is created, click on the "Permissions" tab. Scroll down and click on the Edit button under "Bucket policy".

Update the bucket policy as shown below. Remember to insert your own bucket ARN, which is displayed just above the policy editor.

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Effect": "Allow",
          "Principal": "*",
          "Action": "s3:GetObject",
          "Resource": "<Your ARN>/*"
        }
      ]
    }

Now our S3 bucket is ready. All files put inside this bucket will be publicly accessible.
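If you prefer to generate the policy text rather than edit it by hand, a small helper can fill in the ARN for you. This is a minimal sketch: `build_public_read_policy` is a name invented for this example, and `my-demo-bucket` is a placeholder, not the bucket used in the screenshots.

```python
import json


def build_public_read_policy(bucket_arn: str) -> dict:
    """Build the public-read bucket policy from this step for `bucket_arn`."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": "*",
                "Action": "s3:GetObject",
                # "/*" applies the statement to every object in the bucket.
                "Resource": f"{bucket_arn}/*",
            }
        ],
    }


if __name__ == "__main__":
    # Placeholder ARN; replace it with the ARN of your own bucket.
    policy = build_public_read_policy("arn:aws:s3:::my-demo-bucket")
    print(json.dumps(policy, indent=2))
```

The printed JSON can be pasted directly into the bucket policy editor.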

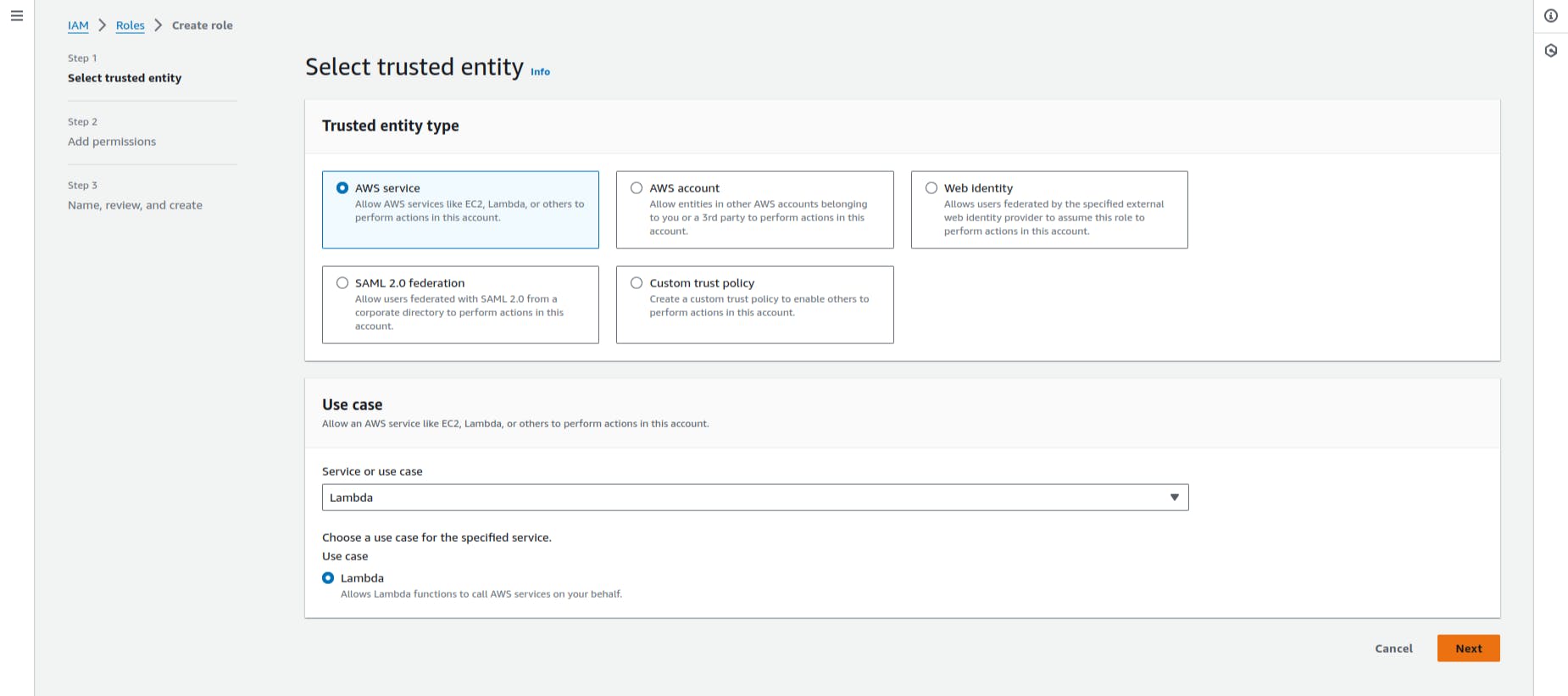

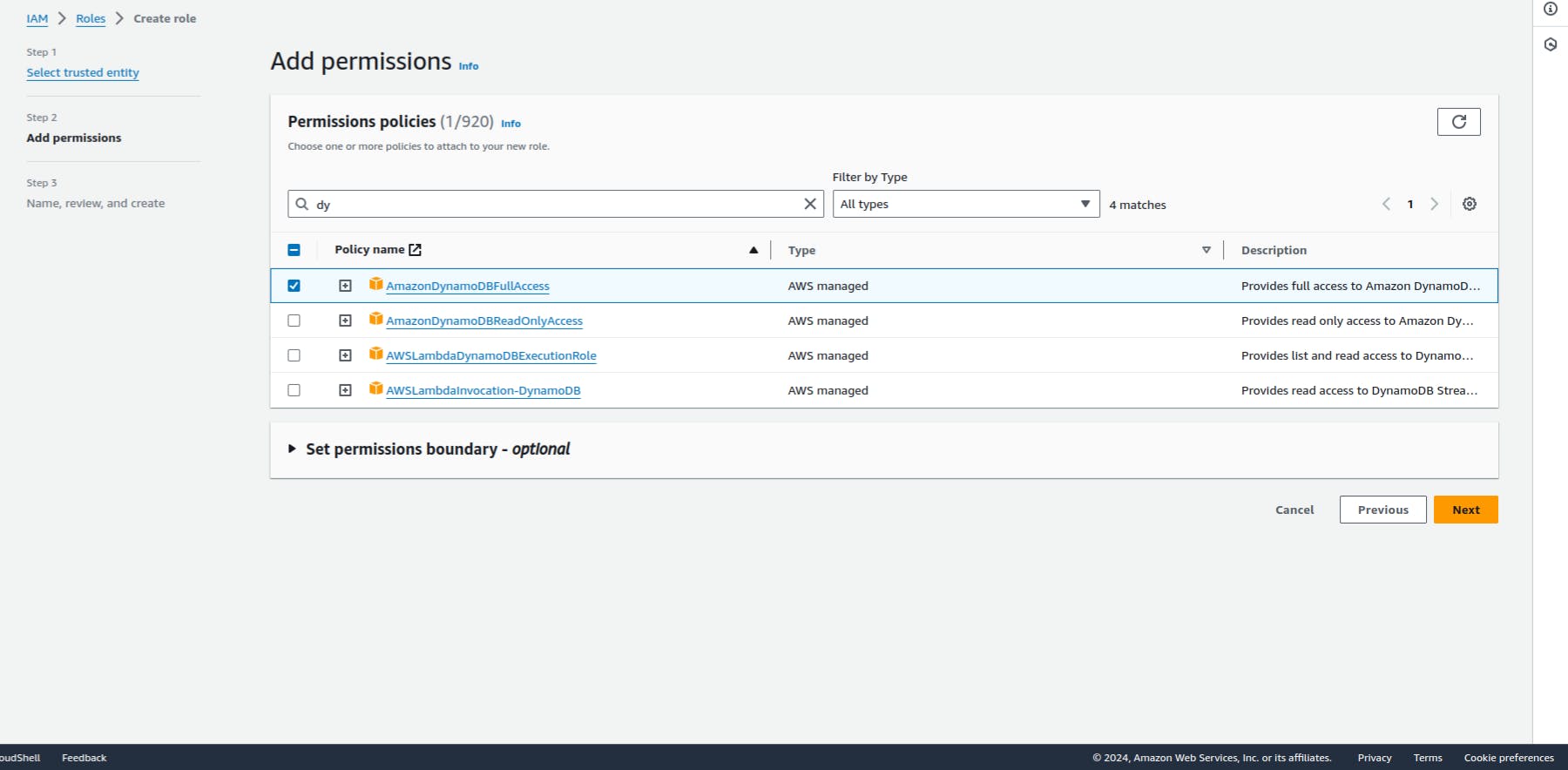

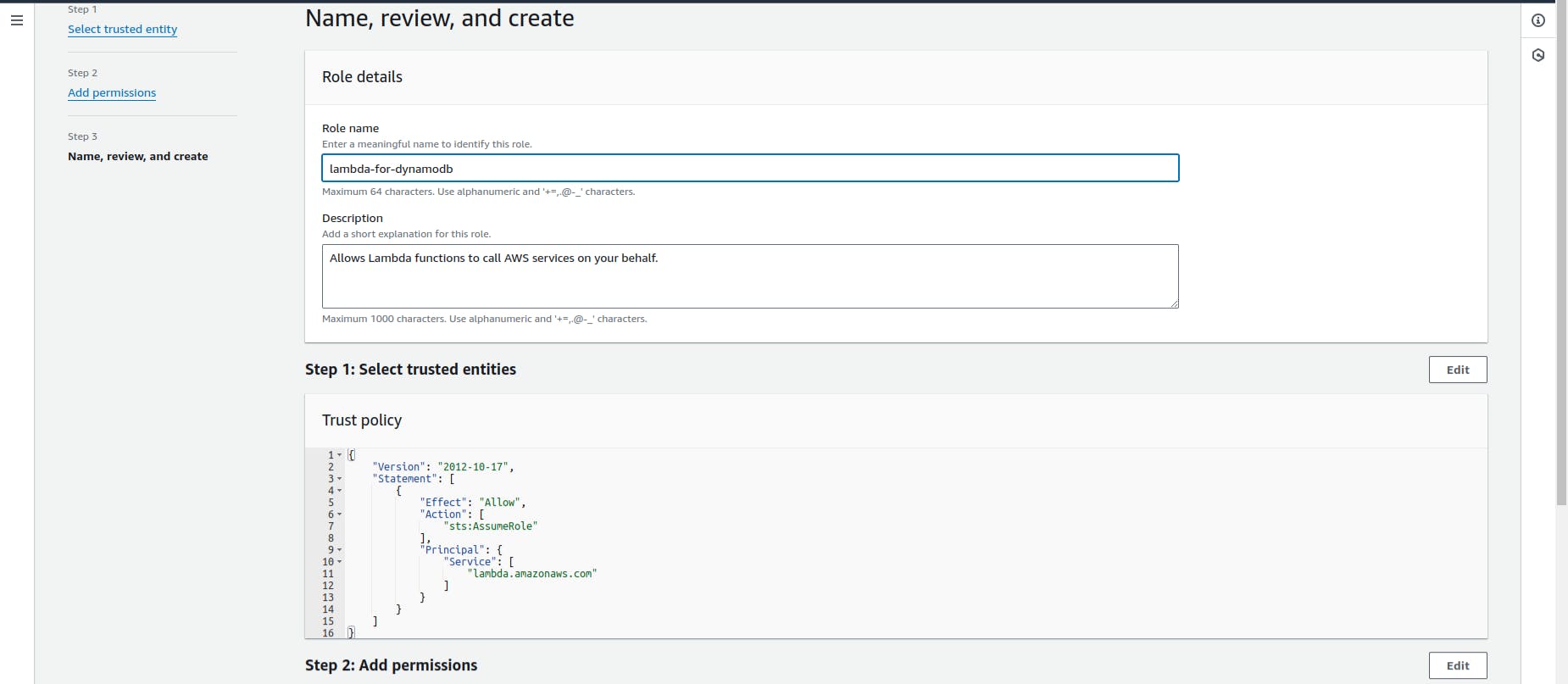

Step2:- Create a Role and permissions policy

Before we can create an execution role for our Lambda function, first we create a permissions policy to give our function permission to access the required AWS resources. The policy allows Lambda to get objects from an Amazon S3 bucket.

To create the policy

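Open the Policies page of the IAM console.

Choose Create policy and define the permissions the function needs. Then open the Roles page, choose Create role, and attach the policy to the new execution role.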
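As a rough illustration of what such a permissions policy might contain, the sketch below builds one as a Python dictionary. The text above only mentions reading objects from S3; the `dynamodb:PutItem` and CloudWatch Logs statements are assumptions added here because the function later writes to DynamoDB and its output is viewed in CloudWatch Logs. The function name and both arguments are hypothetical.

```python
import json


def build_lambda_permissions_policy(bucket_name: str, table_arn: str) -> dict:
    """Build a permissions policy for the Lambda execution role."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Read the objects uploaded to the bucket.
                "Effect": "Allow",
                "Action": ["s3:GetObject"],
                "Resource": f"arn:aws:s3:::{bucket_name}/*",
            },
            {
                # Assumption: the function stores items in this table.
                "Effect": "Allow",
                "Action": ["dynamodb:PutItem"],
                "Resource": table_arn,
            },
            {
                # Assumption: allow the function to write CloudWatch Logs.
                "Effect": "Allow",
                "Action": [
                    "logs:CreateLogGroup",
                    "logs:CreateLogStream",
                    "logs:PutLogEvents",
                ],
                "Resource": "arn:aws:logs:*:*:*",
            },
        ],
    }


if __name__ == "__main__":
    # Placeholder bucket name, account ID and table name.
    policy = build_lambda_permissions_policy(
        "my-demo-bucket",
        "arn:aws:dynamodb:us-east-1:123456789012:table/s3-upload-records",
    )
    print(json.dumps(policy, indent=2))
```

Scoping each statement to a single bucket and table keeps the role from having more access than this project needs.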

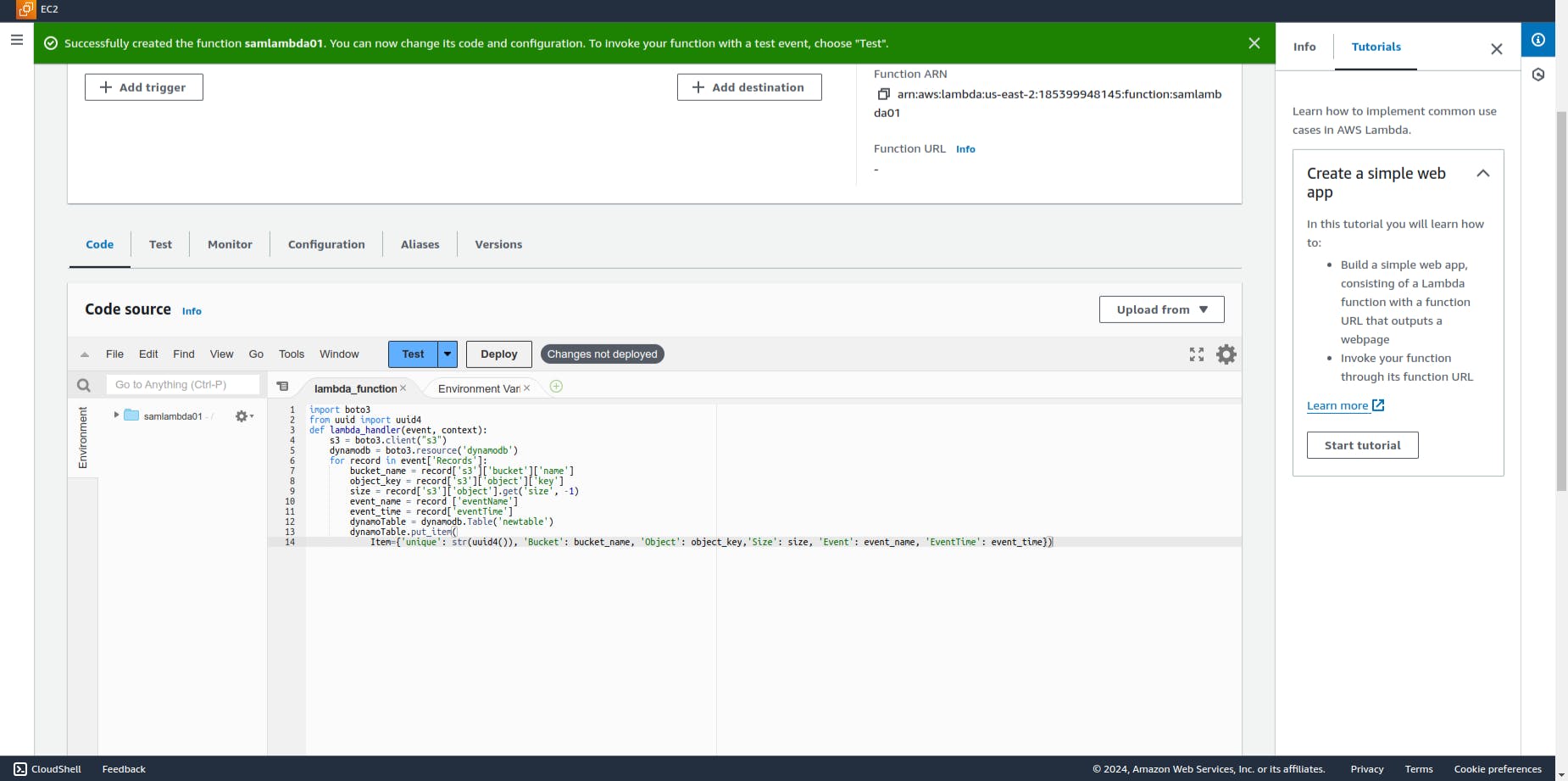

Step3:- Create a Lambda Function

Search for Lambda in the search bar, then click on it.

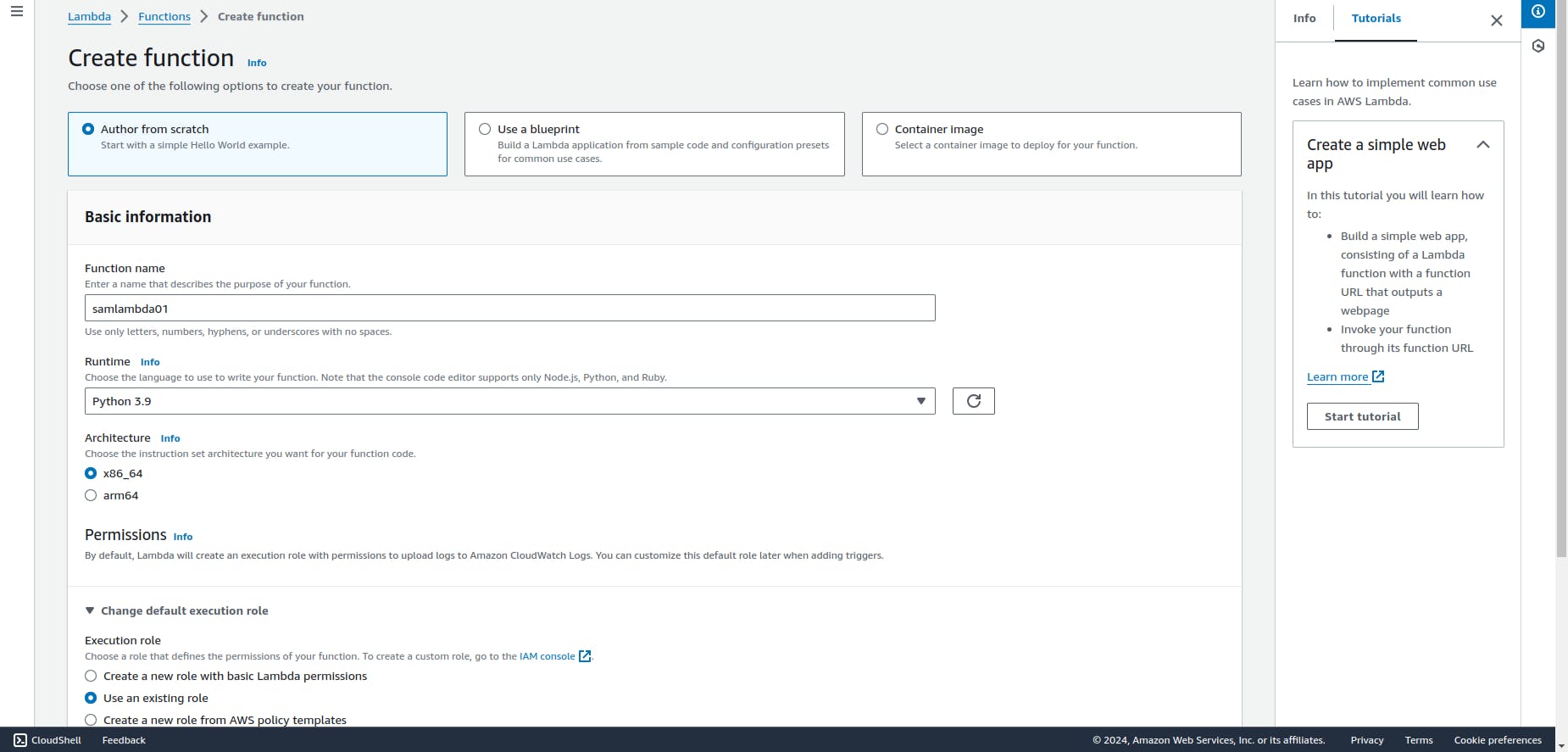

Now click on Create function.

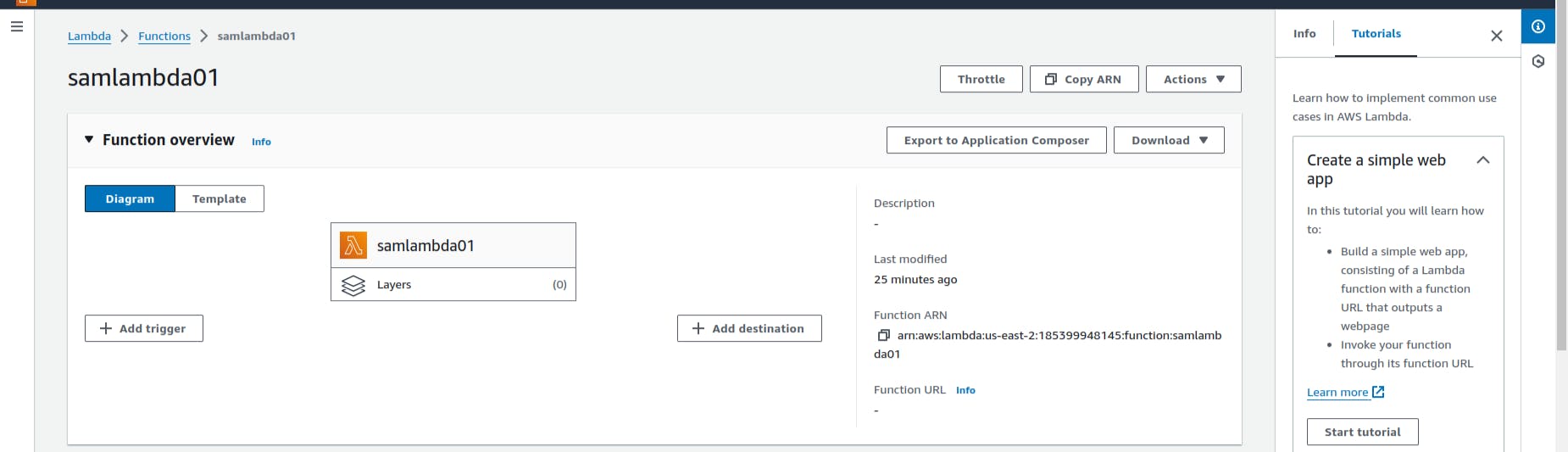

Select the first option, "Author from scratch", and enter the function name. Select Python 3.9 as the runtime and keep everything else at its default. Then click on the "Create function" button.

Click on Add trigger, select S3 as the source, and choose the bucket created in Step 1 so that uploads to it invoke the function.

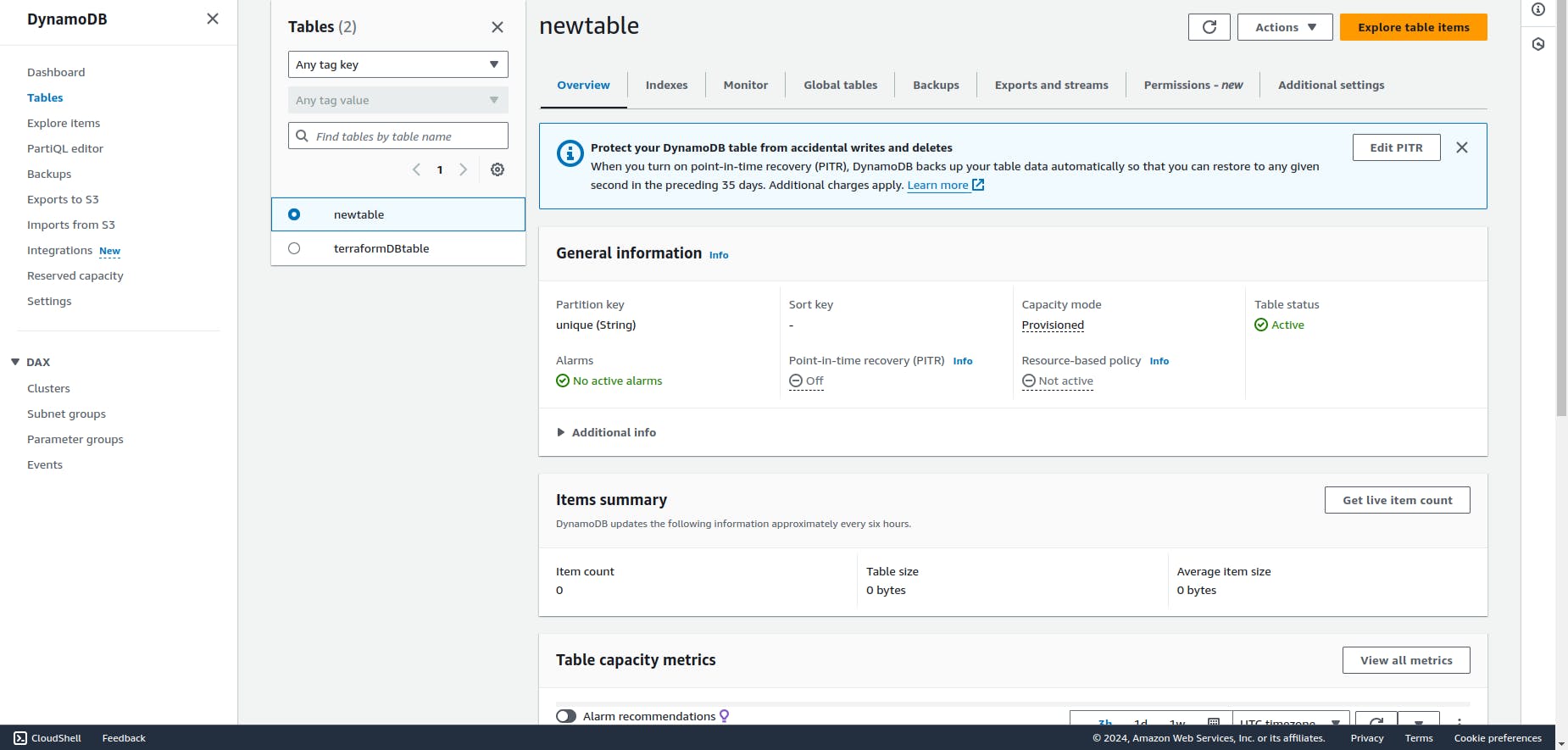

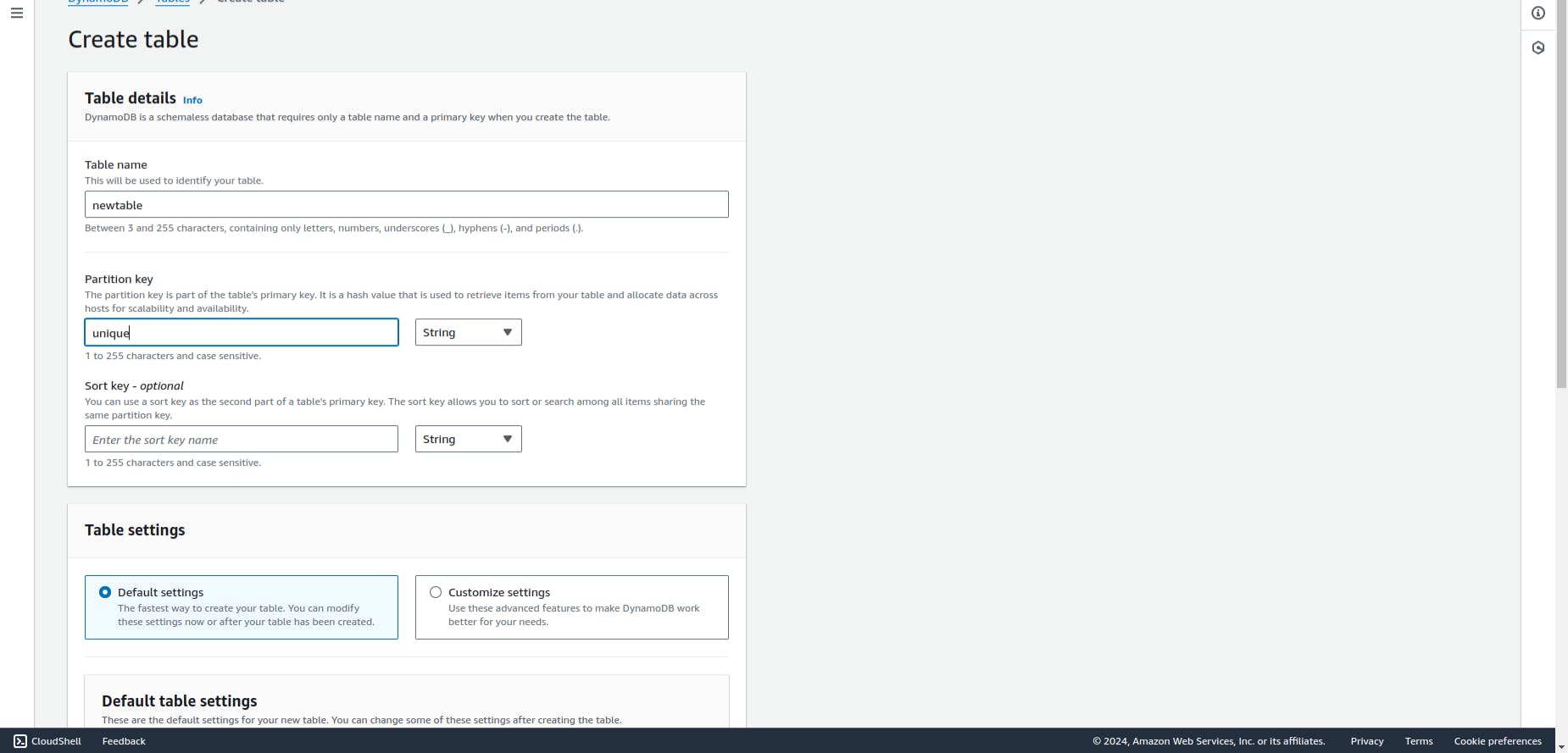
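The function body itself is not shown in this walkthrough, so here is a minimal sketch of what a handler for this project might look like. It reads the bucket name, object key, size and event time from the S3 event notification and writes one item per uploaded object to DynamoDB. The table name `s3-upload-records`, the `TABLE_NAME` environment variable and the attribute names (`object_key`, `bucket`, `size_bytes`, `event_time`) are assumptions made for this example, not values taken from the screenshots.

```python
import os
from urllib.parse import unquote_plus

# Hypothetical table name; set TABLE_NAME on the function to override it.
TABLE_NAME = os.environ.get("TABLE_NAME", "s3-upload-records")


def records_to_items(event: dict) -> list:
    """Turn an S3 event notification into a list of plain item dicts."""
    items = []
    for record in event.get("Records", []):
        s3_info = record["s3"]
        items.append({
            # Object keys arrive URL-encoded (a space becomes "+").
            "object_key": unquote_plus(s3_info["object"]["key"]),
            "bucket": s3_info["bucket"]["name"],
            "size_bytes": s3_info["object"].get("size", 0),
            "event_time": record.get("eventTime", ""),
        })
    return items


def lambda_handler(event, context):
    # boto3 is imported here so records_to_items can be tested without AWS.
    import boto3

    table = boto3.resource("dynamodb").Table(TABLE_NAME)
    items = records_to_items(event)
    for item in items:
        table.put_item(Item=item)
        # print output ends up in the function's CloudWatch Logs.
        print(f"Stored {item['object_key']} from {item['bucket']}")
    return {"stored": len(items)}
```

Keeping the event parsing in its own function means it can be exercised locally with a hand-written event before the trigger is wired up.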

Step4:- Create a DynamoDB table to store our data

Search for DynamoDB in the search bar, then click on it.

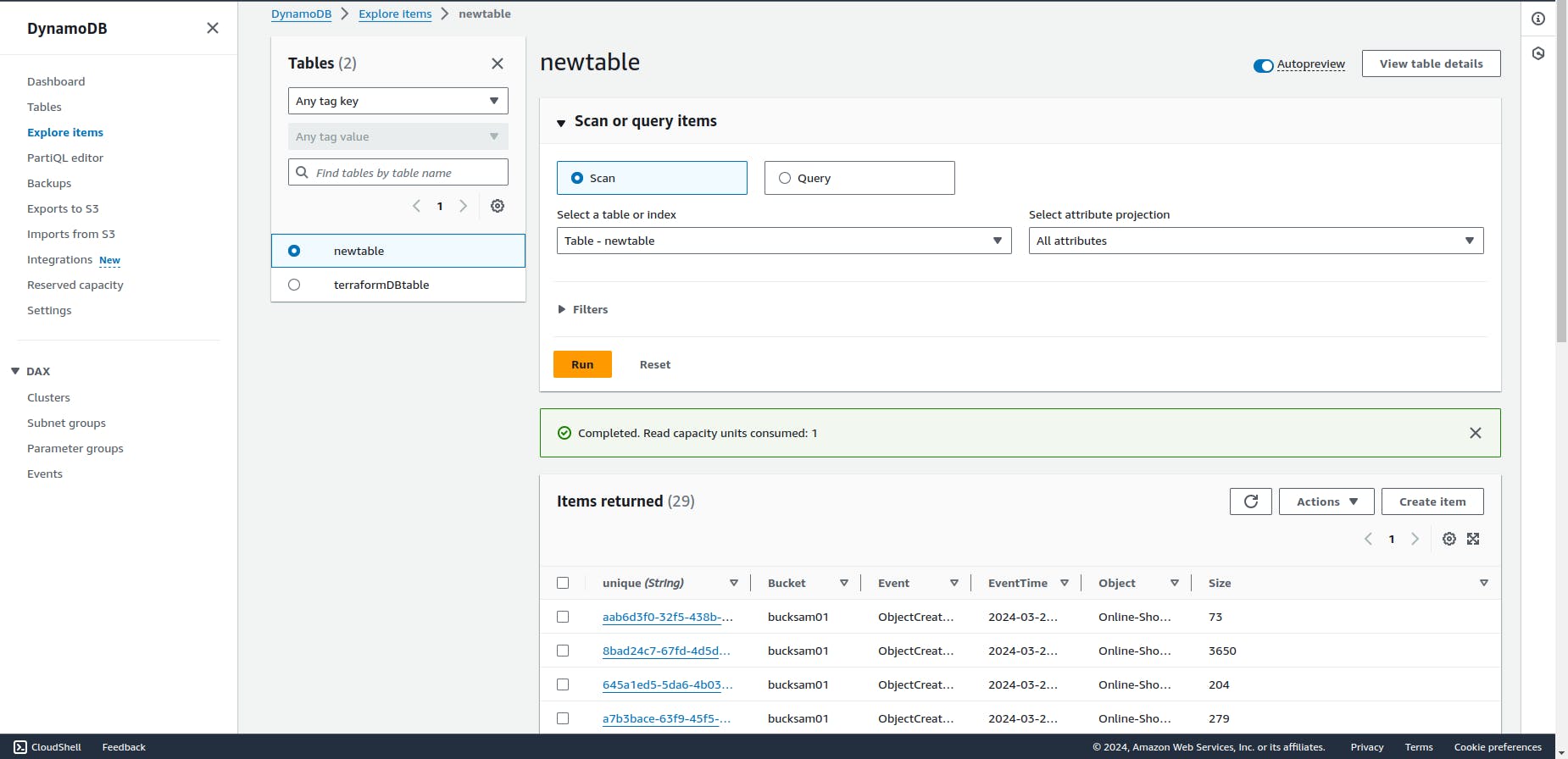
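The table can also be created from code instead of the console. The following is a sketch under stated assumptions: the table name, the partition key `object_key` and the on-demand billing mode are choices made for this example, since the walkthrough does not state which settings were used.

```python
def build_table_definition(table_name: str, partition_key: str = "object_key") -> dict:
    """Build the keyword arguments for a DynamoDB create_table call."""
    return {
        "TableName": table_name,
        # A single string partition key identifies each uploaded object.
        "KeySchema": [{"AttributeName": partition_key, "KeyType": "HASH"}],
        "AttributeDefinitions": [
            {"AttributeName": partition_key, "AttributeType": "S"}
        ],
        # On-demand billing avoids choosing read/write capacity up front.
        "BillingMode": "PAY_PER_REQUEST",
    }


def create_table(table_name: str) -> None:
    # boto3 is imported here so the definition can be inspected without AWS.
    import boto3

    client = boto3.client("dynamodb")
    client.create_table(**build_table_definition(table_name))
    # Block until the table is ready to accept writes.
    client.get_waiter("table_exists").wait(TableName=table_name)
```

Calling `create_table("s3-upload-records")` with valid AWS credentials would create an empty table, ready for the Lambda function to write into.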

At this point our table has no items. To test our function with the configured trigger, we upload an object to our Amazon S3 bucket using the console. To verify that the Lambda function was invoked correctly, we then use CloudWatch Logs to view the function’s output.

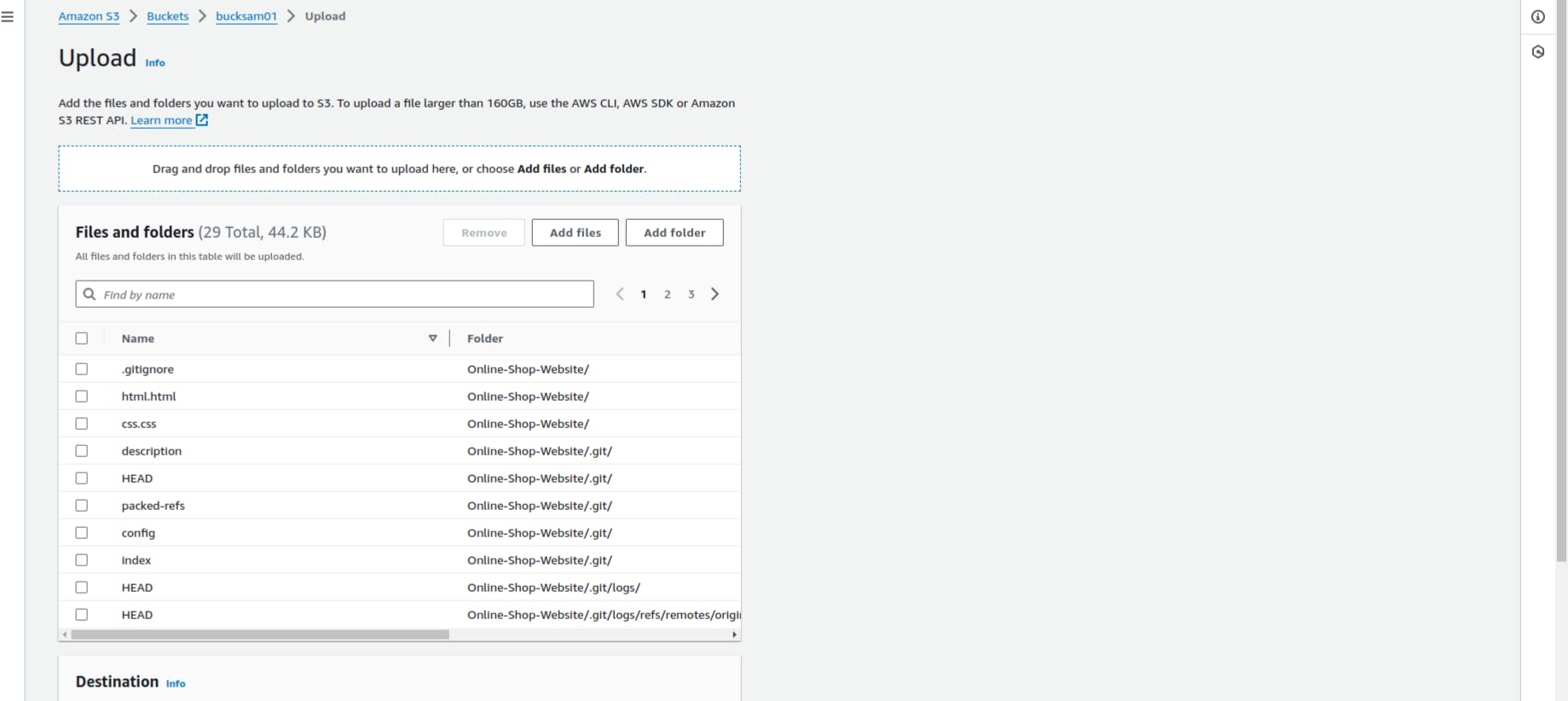

To upload an object to our Amazon S3 bucket

Open the buckets page of the Amazon S3 console and choose the bucket you created earlier.

Choose Upload.

Choose Add files and use the file selector to choose an object you want to upload. This object can be any file you choose.

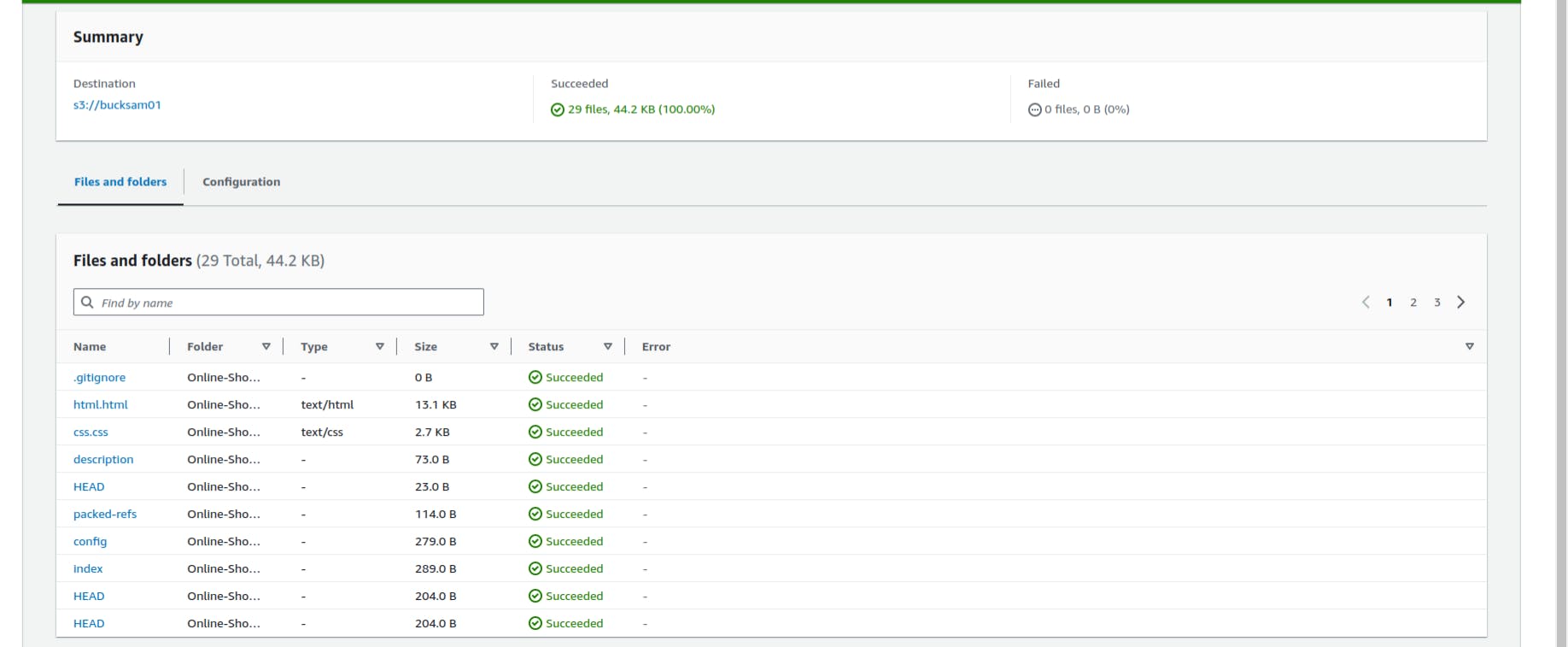

Choose Open, then choose Upload.

We have now successfully uploaded our data to the S3 bucket. Next, we can check whether the upload triggered our Lambda function and whether the data was stored in DynamoDB.
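Besides looking at the table in the console, the check can be scripted. The sketch below assumes the table uses a string partition key called `object_key` that holds the uploaded file's key; both that attribute name and the table name in the usage comment are assumptions for illustration.

```python
def find_uploaded_item(table, object_key: str):
    """Return the stored item for `object_key`, or None if it is missing.

    `table` is expected to behave like a boto3 DynamoDB Table resource.
    """
    response = table.get_item(Key={"object_key": object_key})
    # get_item omits the "Item" field when no matching item exists.
    return response.get("Item")


# Usage against a real table (requires AWS credentials):
#   import boto3
#   table = boto3.resource("dynamodb").Table("s3-upload-records")
#   print(find_uploaded_item(table, "my-file.txt"))
```

A result of `None` shortly after the upload usually means the function has not run or failed, in which case the function's CloudWatch Logs are the next place to look.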